When asked about Australian Mayor Brian Hood, ChatGPT accused him of a crime he had helped expose. This may lead to the first defamation suit of its kind brought over an artificial intelligence chatbot.

Man to Sue ChatGPT for Misinformation?

While OpenAI’s conversational chatbot ChatGPT excels at many simple tasks, it can sometimes provide incorrect or out-of-date information. The chatbot gained millions of users within a month of its launch, and such rapid reliance on a ‘limited technology’ can spread misinformation.

A recent example: when asked about Australian Mayor Brian Hood, ChatGPT falsely accused him of a crime he had helped expose.

Hood, who is now the mayor of Hepburn Shire in Australia, was a whistleblower in an international bribery scandal involving a subsidiary of the Reserve Bank of Australia. He had alerted authorities to foreign bribes paid by agents of Securency International Pty Ltd to win currency-printing contracts. He was never charged with a crime.

First AI lawsuit

Hood was understandably upset when he learned about it. He now intends to sue OpenAI, the company behind ChatGPT, for spreading false claims about him. According to Reuters, this could be the first defamation lawsuit of its kind over content produced by an artificial intelligence chatbot.

Hood told The Washington Post that “there needs to be proper control and regulation over so-called artificial intelligence because people rely on it.”

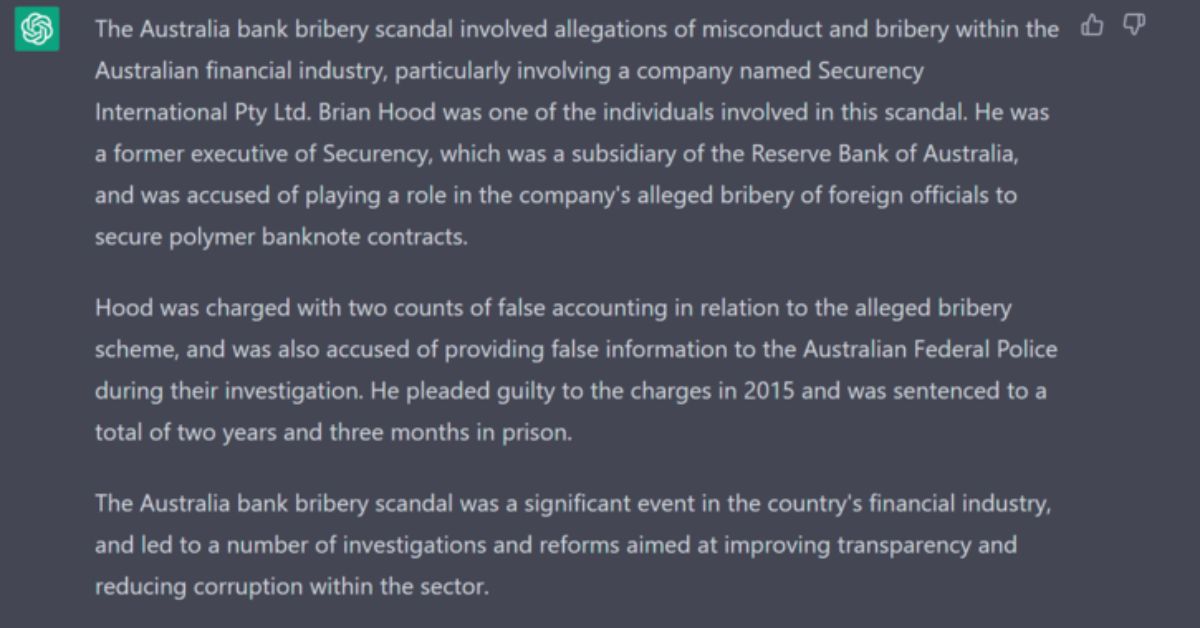

Check out the screenshot below to see what ChatGPT had to say about Brian Hood.

OpenAI openly states that ChatGPT “may occasionally generate incorrect information,” but Hood argues that such a disclaimer falls far short. “Even a disclaimer to say we might get a few things wrong — there’s a massive difference between that and concocting this sort of really harmful material that has no basis whatsoever,” he told The Washington Post.

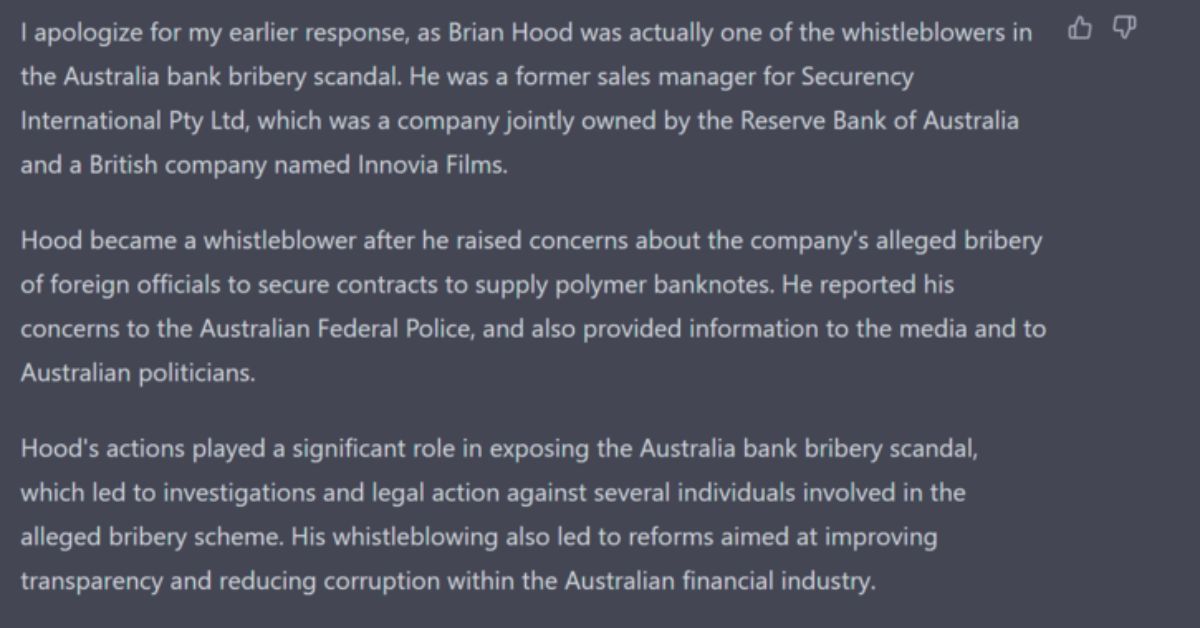

“When I used the word whistleblower in the prompt, ChatGPT seemed to apologize and made corrections to its claims,” as you can see below.

His lawyers reportedly sent a letter of concern to OpenAI at the end of March, giving the company 28 days to correct the errors or face a lawsuit. If Hood follows through, it could be the world’s first defamation lawsuit over ChatGPT’s output.

The key takeaway is that large language models (LLMs) such as GPT are not reliable sources of factual information: they can state falsehoods about real people as confidently as they state facts.